I’ve worked on or with consumer-facing chat apps for more than seven years now. In that time I’ve seen some startling successes, new technology innovations, good chat, bad chat, and some frustrating cases that, despite great ideas, founders, and software engineers, just don’t work very well.

Good: Digital Banking Assistant

The “intelligent digital engagement platform” puts a bot where a banker would be. Users get personalized help. The bank gets to interact with customers without brick and mortar or, during Covid, without anyone having to walk into a physical branch.

Why it works A: They recorded loads of interactions and built a repository of them, each linked to a user action such as “check balance” or “open an account.” The chat engine makes the conversation sound more or less like a human one by using predictive text, the same basic idea behind ChatGPT, which you’ve probably played around with.
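Here is a minimal sketch of the lookup half of that idea. It assumes the recorded interactions are stored as example phrases keyed by the action they should trigger. The names `INTENT_EXAMPLES` and `match_intent` and the 0.3 threshold are hypothetical. Plain word overlap (Jaccard similarity) stands in for whatever matching the real platform uses, and the sketch does not cover the predictive-text side of generating replies.

```python
from __future__ import annotations

import re

# Hypothetical repository: utterances recorded from past conversations,
# each linked to the user action it should trigger.
INTENT_EXAMPLES = {
    "check_balance": [
        "what is my balance",
        "how much money do i have",
        "check my account balance",
    ],
    "open_account": [
        "i want to open an account",
        "open a new savings account",
        "how do i start a checking account",
    ],
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two token sets: |a & b| / |a | b|."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def match_intent(utterance: str, threshold: float = 0.3) -> str | None:
    """Return the action whose recorded example best matches the
    utterance, or None if nothing is similar enough."""
    tokens = tokenize(utterance)
    best_action, best_score = None, 0.0
    for action, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = jaccard(tokens, tokenize(example))
            if score > best_score:
                best_action, best_score = action, score
    return best_action if best_score >= threshold else None


print(match_intent("What's my balance?"))           # check_balance
print(match_intent("I'd like to open an account"))  # open_account
print(match_intent("Tell me a joke"))               # None
```

Returning `None` below the threshold lets the bot say it didn't understand instead of confidently triggering the wrong action.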

Why it works B: It’s an extremely narrow domain. The platform only looks for things this bank offers and this particular customer is allowed to do. For software engineers, this means a relatively simple set of rules and decision trees.
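Here is a sketch of what “a relatively simple set of rules and decision trees” can look like, assuming a hypothetical tree with two top-level actions. Each answer moves one level down the tree. Anything outside the tree is handed to a human rather than guessed at, which is only workable because the domain is this narrow. `Node`, `TREE`, `walk`, and the action names are illustrative, not the platform’s.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One step in a hypothetical banking decision tree.

    A node with branches asks a question; a node without branches
    names the action to perform."""
    prompt: str
    branches: dict[str, Node] = field(default_factory=dict)

    def is_leaf(self) -> bool:
        return not self.branches


# Hypothetical tree: only things this bank offers are reachable.
TREE = Node(
    "Do you want to check a balance or open an account?",
    {
        "balance": Node(
            "Which account: checking or savings?",
            {
                "checking": Node("ACTION: show_checking_balance"),
                "savings": Node("ACTION: show_savings_balance"),
            },
        ),
        "open": Node("ACTION: start_account_application"),
    },
)


def walk(tree: Node, answers: list[str]) -> str:
    """Follow the tree using the user's answers in order.

    Returns the leaf's action, the next question if the answers run
    out first, or a hand-off when an answer falls outside the tree."""
    node = tree
    for answer in answers:
        if node.is_leaf():
            break
        next_node = node.branches.get(answer.strip().lower())
        if next_node is None:
            return "ACTION: hand_off_to_human"
        node = next_node
    return node.prompt


print(walk(TREE, ["balance", "savings"]))  # ACTION: show_savings_balance
print(walk(TREE, ["balance"]))             # Which account: checking or savings?
print(walk(TREE, ["mortgage"]))            # ACTION: hand_off_to_human
```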

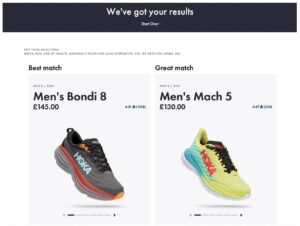

Good: Product Chooser

A real-time product recommendation system. The conversational app walks (pun intended) the user through a series of questions in order to find the right pair of shoes. The bot asks questions, gathers feedback, feeds it all into the recommender system, and spits back a product or list of products. It’s conversational, it’s machine learning, but there’s not a whole lot of AI.

Why it works: Narrow domain, finite data set (there is a finite number of shoes it can recommend). Simple machine learning: it ranks a fixed set of alternatives and predicts the best fit from this user’s answers and the wider dataset (all other users, what the manufacturer suggests, etc.). The “intelligence” is easy to measure: if a customer clicks the “buy” link, we count that as a success and tag the recommendation flow that led there.
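Here is a minimal sketch of that loop. It assumes a tiny hypothetical catalog and uses “what earlier users bought” as the only ranking signal. `CATALOG`, `recommend`, and `record_buy_click` are illustrative names, not the product’s API. A real recommender would also weigh manufacturer suggestions and a trained model.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Shoe:
    sku: str
    activity: str  # answer to "What will you use them for?"
    width: str     # answer to "Regular or wide fit?"


# Hypothetical finite catalog: the bot can only ever recommend these.
CATALOG = [
    Shoe("RUN-100", "running", "regular"),
    Shoe("RUN-200", "running", "wide"),
    Shoe("WALK-300", "walking", "regular"),
    Shoe("WALK-400", "walking", "wide"),
]

purchases = Counter()   # SKU -> buy clicks attributed to the bot
successful_flows = []   # answers that led to a buy click, tagged with the SKU


def recommend(answers: dict[str, str], top_n: int = 2) -> list[str]:
    """Keep shoes that match every answer, then rank them by how often
    earlier users bought them (ties keep catalog order)."""
    matches = [
        shoe for shoe in CATALOG
        if all(getattr(shoe, key) == value for key, value in answers.items())
    ]
    matches.sort(key=lambda shoe: purchases[shoe.sku], reverse=True)
    return [shoe.sku for shoe in matches[:top_n]]


def record_buy_click(answers: dict[str, str], sku: str) -> None:
    """A click on "buy" is the success signal: tag the flow and count it."""
    successful_flows.append({**answers, "bought": sku})
    purchases[sku] += 1


print(recommend({"activity": "running"}))  # ['RUN-100', 'RUN-200']
record_buy_click({"activity": "running", "width": "wide"}, "RUN-200")
print(recommend({"activity": "running"}))  # ['RUN-200', 'RUN-100']
```

Because success is just a click, the feedback loop needs no interpretation of what the user meant. That is much of why this kind of bot is easy to get right.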

Ugly: Online Mental Health Guide

The app tries to stand in for the first few sessions with a mental health therapist. It is armed with standard mental health intake questions, a lot of data about suggested courses of action, and machine learning on what worked and what didn’t.

What does not work: The engine cannot discern emotions and cannot really learn. We have the questions (“how are you feeling today?”), but we cannot parse the answers, because users don’t give standard answers. Consider the words “depressed,” “blue,” or “sad.” For a practiced therapist, each of these words prompts follow-up questions. From the answers, the therapist can work out a diagnosis and make recommendations accordingly (an app, a podcast, online therapy, group therapy, etc.). It’s very difficult indeed to train an app to think like that. These apps chat nicely; they simply don’t understand what is being said to them. Add to that the complexity of moral reasoning, compassionate conversation, and the perils of talking to people who feel suicidal, and you get a sense of why it works some of the time, but nowhere near all of the time.
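A deliberately naive sketch makes the parsing problem concrete. It assumes a hypothetical intake bot that flags low mood by spotting the keywords mentioned above. The lexicon and the function name are illustrative only, not taken from any real product. Keyword spotting is fooled by negation and by other senses of a word, and it misses answers that describe the same state without using any of the expected words.

```python
import re

# Hypothetical lexicon a naive intake bot might rely on.
LOW_MOOD_WORDS = {"depressed", "blue", "sad"}


def flags_low_mood(answer: str) -> bool:
    """Naive check: does any low-mood keyword appear in the answer?"""
    words = set(re.findall(r"[a-z]+", answer.lower()))
    return bool(words & LOW_MOOD_WORDS)


answers = [
    "I've been feeling pretty blue lately",     # flagged, correctly
    "Honestly I'm not sad at all, just tired",  # flagged, wrongly (negation)
    "I painted my kitchen blue this weekend",   # flagged, wrongly (other sense)
    "I can't get out of bed most mornings",     # missed: no keyword at all
]

for answer in answers:
    print(flags_low_mood(answer), "-", answer)
```

Three of the four answers get the wrong treatment. Piling on more keywords does not fix that, because the problem is understanding, not vocabulary.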

It’s Not Easy

We’ve come a long way since the days of Eliza, the grandmother of all conversational chat bots. But that doesn’t mean the end is in sight, nor does it mean that chat bots can do everything. They can’t. Read Cory Doctorow’s explanation in ‘“Conversational” AI Is Really Bad At Conversations.’ Noam Chomsky explains the perils and pitfalls of conversational AI in “The False Promise of ChatGPT.”

•••

Are you interested in conversational AI chat? Let us know.